Technology is entwined with much of our day-to-day lives, with no better example than the smartphone, a device now seen as a must-have. Payments and banking are almost unrecognisable from ten years ago, with online banking, mobile apps, ‘chip and pin’, contactless payments and online payments.

At events, however, many attendees find that trying to make a simple credit or debit card payment can be a frustrating and unreliable experience. For us as technology providers, ‘credit card machines’, or PDQs as they are known, come top of the list of complaints from event organisers, traders and exhibitors.

These problems not only cause frustration for attendees but also present a serious issue in terms of financial return for traders and exhibitors, and their desire to be present at events. It is well documented that the ability to take contactless and chip & pin payments at events increases takings, reduces risks from large cash volumes and can improve flow and trackability.

So why is it such a problem? Much of the issue comes down to poor communication and misinformation on top of what is already a relatively complex environment. Card payments, and the machines which take them, are highly regulated by the banking industry, meaning they tend to lag behind other technology. This can be overcome, however, and a properly thought-through approach can deliver large-scale, reliable payment systems.

Bad Terminology

A lot of the confusion around PDQ machines comes from their design and the terminology used. Although the machines all look the same, there are differences in the way they work. Nearly all PDQs use the design of a cradle/base station with a separate handheld unit. The handheld part connects to the base station using Bluetooth. This is where the confusion starts, as people often describe these units as ‘wireless’ because of the Bluetooth. However, their actual method of connectivity to the bank may be one of four different types:

- Telephone Line (PSTN – Public Switched Telephone Network) – This is the oldest and, until a few years ago, the most common type of device. It requires a physical telephone line between the PDQ modem and the bank. It is slow, and it is difficult and very costly to use at event sites because of the need for a dedicated physical phone line. Once it is working, however, it is reliable.

- Mobile PDQ (GPRS/GSM) – Currently the most common form of PDQ, it uses a SIM card to connect back to the bank over a mobile network using GSM or GPRS. Originally seen as the go-anywhere device, these units are excellent in the right situation, but they have limitations, the most obvious being that they require a working mobile network. At busy event sites the mobile networks rapidly become saturated, which means the devices cannot connect reliably. As they use older GPRS/GSM technology they are also very slow: it makes no difference if you use the device in a 4G area, because it can only work using GPRS/GSM. As they use the mobile operator networks they may also incur data charges.

- Wi-Fi PDQ – Increasingly common, this version connects to a Wi-Fi network to get its connectivity to the bank. On the surface this sounds like a great solution, but there are some challenges. Firstly, it needs a good, reliable Wi-Fi network. Secondly, many Wi-Fi PDQs still operate on the 2.4GHz Wi-Fi spectrum, which on event sites is heavily congested and suffers lots of interference, making the devices unreliable. This is not helped by the relatively weak Wi-Fi components in a PDQ compared to, for example, a laptop. It is essential to check that any Wi-Fi PDQ is capable of operating in the less congested 5GHz spectrum.

- Wired IP PDQ – Often maligned because people think it doesn’t have a ‘wireless’ handset. In fact it has the same wireless handset as all the others, but the base station uses a physical cable (Cat5) to connect to a network. In this case the network is a computer network using TCP/IP, and the transactions are routed in encrypted form across the internet. If a suitable network is available on an event site then this type of device is the most reliable and fastest, and there are no call charges.

All of these units look very similar and in fact can be built to operate in any of the four modes. However, because banks ‘certify’ units, they generally only approve one type of connectivity in a particular device. This is slowly starting to change, but the vast majority of PDQs in the market today can only operate on one type of connectivity, and this is not user configurable.

On top of these aspects there is also the difference between ‘chip & pin’ and ‘contactless’. Older PDQs typically can only take ‘chip & pin’ cards whereas newer devices should also be enabled for contactless transactions.
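As a rough illustration of how the four connectivity types map onto what a site can offer, here is a minimal Python sketch. The `Site` fields, the `Connectivity` names and the ordering of the checks are our own assumptions for illustration only; they are not taken from any PDQ vendor or bank. Wired IP is listed first simply because it is described above as the most reliable and fastest option where a suitable network exists.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Connectivity(Enum):
    """The four connectivity types described above (names are illustrative)."""
    PSTN = "telephone line"
    MOBILE = "GPRS/GSM SIM"
    WIFI = "Wi-Fi"
    WIRED_IP = "wired IP"


@dataclass
class Site:
    """Hypothetical summary of what an event site can provide."""
    has_phone_line: bool
    mobile_network_uncongested: bool
    has_5ghz_wifi: bool
    has_wired_network: bool


def viable_connectivity(site: Site) -> List[Connectivity]:
    """Return the PDQ connectivity types the site can support.

    Each type is paired with the single site condition it depends on.
    Real deployments involve more factors than this simple yes/no check.
    """
    checks = [
        (Connectivity.WIRED_IP, site.has_wired_network),
        (Connectivity.WIFI, site.has_5ghz_wifi),
        (Connectivity.MOBILE, site.mobile_network_uncongested),
        (Connectivity.PSTN, site.has_phone_line),
    ]
    return [kind for kind, available in checks if available]


# Example: a busy festival with a dedicated event network but saturated mobile masts.
festival = Site(
    has_phone_line=False,
    mobile_network_uncongested=False,
    has_5ghz_wifi=True,
    has_wired_network=True,
)
print([kind.value for kind in viable_connectivity(festival)])
```

Because most PDQs are certified for only one type of connectivity, a check like this is really about which device to bring or rent, not which mode to switch an existing device into.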

Myth or Fact

Alongside confusion around the various types of PDQs there is a lot of conflicting and often inaccurate information circulated about different aspects of PDQs. Let’s start with some of the more common ones.

I have a good signal strength so why doesn’t it work?

The reporting of signal strength on devices does nothing but create frustration. Firstly because it is highly inaccurate and crude, and secondly because it means very little – a ‘good’ signal indicator does not mean that the network will work!

The issue is that signal strength does not mean there is capacity on the network. It is frequently the case at event sites that a mobile phone will show full signal strength because a temporary mobile mast has been installed, but there is not enough data capacity to service all the devices, so the network does not work. A useful analogy is comparing networks to a very busy motorway. You can get on, but you won’t necessarily go anywhere. The same can be true on a poorly designed Wi-Fi network, or a well-designed Wi-Fi network which doesn’t have enough internet capacity.

In fact you can have a low signal strength and still get very good data throughput on a well-designed network. Modern systems also use a technique known as ‘beam-forming’, where the signal is focused towards a device while it is actually transferring data. This means a device may show a low signal strength when idle, which increases when it is doing something.

On the flip side, your device may show a good signal strength but the quality of the signal may be poor. This could be due to interference, poor design or sometimes even weather and environmental conditions!
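The difference between signal and capacity can be put into a few lines of Python. This is a deliberately simplified sketch: it assumes capacity is shared evenly between active devices, and the figures (a 20 Mbps mast, a 10 kbps requirement per transaction) are made-up illustrative values, not measurements.

```python
def per_device_kbps(cell_capacity_kbps: float, active_devices: int) -> float:
    """Evenly split a shared cell's capacity between active devices.

    Real networks do not share capacity this neatly; this is a simplification.
    """
    if active_devices < 1:
        raise ValueError("active_devices must be at least 1")
    return cell_capacity_kbps / active_devices


def can_transact(signal_bars: int, cell_capacity_kbps: float,
                 active_devices: int, required_kbps: float = 10.0) -> bool:
    """A device needs some signal AND a large enough share of the capacity."""
    has_signal = signal_bars > 0
    has_capacity = per_device_kbps(cell_capacity_kbps, active_devices) >= required_kbps
    return has_signal and has_capacity


# Full signal, quiet site: 20,000 kbps shared by 200 devices is 100 kbps each.
print(can_transact(signal_bars=5, cell_capacity_kbps=20_000, active_devices=200))

# Full signal, packed site: the same mast shared by 20,000 devices is 1 kbps each.
print(can_transact(signal_bars=5, cell_capacity_kbps=20_000, active_devices=20_000))

# Weak signal, well-provisioned network: one bar, but 400 kbps available per device.
print(can_transact(signal_bars=1, cell_capacity_kbps=20_000, active_devices=50))
```

In this toy model the number of bars only decides whether the device can reach the network at all; whether a payment goes through depends on the share of capacity left once everyone else is connected.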

Wi-Fi networks are less secure than mobile networks

There are two parts to this. Firstly, all PDQs encrypt their data no matter what type of connection they use; they have to, in order to meet banking standards (PCI-DSS) and protect against fraud. Secondly, a well-designed Wi-Fi network is as secure as, if not more secure than, a mobile network. A good Wi-Fi network will use authentication, strong encryption and client isolation to protect devices. All PDQs should also be connected to a separate ‘virtual network’ to isolate them from any other devices.
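One simple way to sanity-check the ‘virtual network’ separation is to confirm that every PDQ has an address inside the range reserved for payments. The sketch below uses Python’s standard `ipaddress` module; the subnet `10.20.0.0/24` and the device addresses are hypothetical values chosen for illustration, and an address check like this complements, but does not replace, proper network controls.

```python
import ipaddress
from typing import Iterable, List

# Hypothetical address range reserved for the payment 'virtual network'.
PAYMENT_SUBNET = ipaddress.ip_network("10.20.0.0/24")


def is_on_payment_network(addr: str) -> bool:
    """True if the address falls inside the dedicated payment subnet."""
    return ipaddress.ip_address(addr) in PAYMENT_SUBNET


def misplaced_devices(pdq_addresses: Iterable[str]) -> List[str]:
    """Return the PDQ addresses that are NOT on the payment subnet."""
    return [addr for addr in pdq_addresses if not is_on_payment_network(addr)]


# Example: the second PDQ has ended up on a general-purpose network.
print(misplaced_devices(["10.20.0.15", "192.168.1.40"]))
```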

You have to keep logging into the Wi-Fi network

Wi-Fi networks can be configured in many ways but for payment systems there should be no need to keep having to log in. This problem tends to be seen when people are trying to use a payment system on a ‘Public Wi-Fi network’ which will often have a login hijack/splash page and a time limit.

A multi-network M2M GPRS/GSM SIM is guaranteed to work

Sadly this is not true. Although a PDQ with a SIM card which can roam between mobile networks and use GPRS or GSM may offer better connectivity, there is no guarantee. Some event sites have little or no coverage from any mobile operator, and even where there is coverage, capacity is generally the limiting factor.

Mobile signal boosters will solve my problem

Mobile signal boosters, or more correctly signal repeaters, are used professionally by mobile operators in some circumstances, for example inside large buildings, to create coverage where signal strength is very weak because of the building’s construction (perhaps there is a lot of glass or metal, which can reduce signals from outside). In the UK the purchase and use of them by anyone outside of a mobile operator is illegal (they can cause more problems with interference). For temporary event sites they provide little benefit anyway, as the root cause of problems is typically capacity.

A Personal hotspot (Mi-Fi) will solve my problem

Personal hotspots or Mi-Fi devices work by connecting to a mobile network to get connectivity and then broadcasting a local Wi-Fi network for devices to connect to. Unfortunately, at event sites where the mobile networks are already overloaded these devices offer little benefit, and even if they can get connected to a mobile network the Wi-Fi aspect struggles against all the other wireless devices. On top of that these devices cause additional interference for any existing on-site network making the whole situation even worse.

The Next Generation & the Way Forward…

The current disrupters in the payment world are the mobile apps with devices such as PayPal Here and iZettle. Although they avoid the traditional PDQ they still require good connectivity, either from the mobile networks or a Wi-Fi network, and hence the root problem still exists.

Increasingly, exhibitors are also using online systems to extend their offerings at events via tablets and laptops, which also require connectivity. An even better connection is required for these devices as they are often transferring large amounts of data, placing more demands on the network. Even virtual reality is starting to appear on exhibitors’ stands, so there is no doubt that the demand for good connectivity will continue to increase year on year.

What the history of technology teaches us is that demand always runs ahead of capacity. This is especially true when it comes to networks. For mobile operators to deliver the level of capacity required at a large event is costly and complex, and in some cases just not possible due to limits on available wireless spectrum.

4G is a step forward but still comes nowhere close to meeting the need in high demand areas such as events, and that situation will worsen as more people move to 4G and the demand for capacity increases. Already the talk is of 5G but that is many years away.

For events, realistically, the position for the foreseeable future is a mixed one. For small events with limited requirements, in a location well serviced by mobile networks, 3G/4G can be a viable option, albeit with risks. No mobile network is guaranteed, and because it is a shared medium, performance will always drop as the volume of users increases. There are no hard and fast rules around this as there are many factors, but in simple terms the more attendees present, the lower the performance!

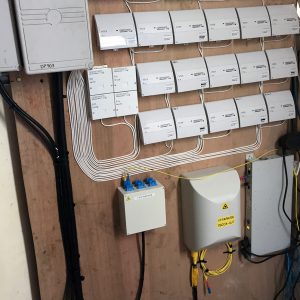

For any sizeable event the best approach is a dedicated event network serviced with appropriate connectivity providing both Wi-Fi and wired connections. This solution facilitates usage for Wired IP-based PDQs, Wi-Fi PDQs, iZettle and other new payment devices, as well as supporting requirements for tablets, laptops and other mobile devices, each managed by appropriate network controls.

With the right design this approach provides the best flexibility and reliability to service the ever-expanding list of payment options. What is particularly important is that an event network is under the control of the event organiser (generally via a specialist contractor) and not a mobile operator, as this removes a number of external risks. For those without existing compatible PDQs the option of rental of a wired or Wi-Fi PDQ can be offered at the time of booking.

The key in all of this is planning and communication. Payment processing has to be tightly controlled from a security point of view, so it is important that enough time is available to process requests, especially where temporary PDQs are being set up, as they often require around 10 working days.
